おのたく日記 ![[RDF]](images/rdf.png) YouTubeも始めました→

YouTubeも始めました→

2018-11-11(Sun) [ZFS] DISKが壊れた? [長年日記] この日を編集

■ ZFSでエラー発生メールを受け取る

From: root

Subject: ZFS device fault for pool 0x9773EB28D655981A on on-o.com

The number of I/O errors associated with a ZFS device exceeded

acceptable levels. ZFS has marked the device as faulted.

impact: Fault tolerance of the pool may be compromised.

eid: 40

class: statechange

state: FAULTED

host: on-o.com

time: 2018-11-11 07:25:07+0900

vpath: /dev/disk/by-id/ata-TOSHIBA_DT01ACA300_66WYX9FS-part1

vguid: 0x1E626A2E7FA04A2D

pool: 0x9773EB28D655981A

マジですか? と思っていたら自動的にscrubも実行されて、メールが来た

Subject: ZFS scrub_finish event for tank on on-o.com

ZFS has finished a scrub:

eid: 43

class: scrub_finish

host: on-o.com

time: 2018-11-11 11:00:20+0900

pool: tank

state: DEGRADED

status: One or more devices are faulted in response to persistent errors.

Sufficient replicas exist for the pool to continue functioning in a

degraded state.

action: Replace the faulted device, or use 'zpool clear' to mark the device

repaired.

scan: scrub repaired 288K in 10h36m with 0 errors on Sun Nov 11 11:00:20 2018

config:

NAME STATE READ WRITE CKSUM

tank DEGRADED 0 0 0

mirror-0 DEGRADED 0 0 0

ata-ST4000DM000-1F2169_Z997V2F2-part1 ONLINE 0 0 0

ata-TOSHIBA_DT01ACA300_66SWYX9FS-part1 FAULTED 0 0 9 too many errors

errors: No known data errors

というわけで、TOSHIBAの3TB HDDでチェックサムエラーが9回発生して、too many errorsでFAULTEDになっている。

詳しく見てみると

# zpool status -v

pool: tank

state: DEGRADED

status: One or more devices are faulted in response to persistent errors.

Sufficient replicas exist for the pool to continue functioning in a

degraded state.

action: Replace the faulted device, or use 'zpool clear' to mark the device

repaired.

scan: scrub repaired 288K in 10h36m with 0 errors on Sun Nov 11 11:00:20 2018

config:

NAME STATE READ WRITE CKSUM

tank DEGRADED 0 0 0

mirror-0 DEGRADED 0 0 0

ata-ST4000DM000-1F2169_Z997V2F2-part1 ONLINE 0 0 0

ata-TOSHIBA_DT01ACA300_66SWYX9FS-part1 FAULTED 0 0 9 too many errors

errors: No known data errors

「zpool clear」してみろとのことなので、まずはSMARTと並行してエラーのクリアをしてみる

# zpool clear tank ata-TOSHIBA_DT01ACA300_66SWYX9FS-part1

# zpool status

pool: tank

state: ONLINE

status: One or more devices is currently being resilvered. The pool will

continue to function, possibly in a degraded state.

action: Wait for the resilver to complete.

scan: resilver in progress since Mon Nov 12 22:53:58 2018

338G scanned out of 1.79T at 858M/s, 0h29m to go

5.44G resilvered, 18.37% done

config:

NAME STATE READ WRITE CKSUM

tank ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

ata-ST4000DM000-1F2169_Z997V2F2-part1 ONLINE 0 0 0

ata-TOSHIBA_DT01ACA300_66SWYX9FS-part1 ONLINE 0 0 0 (resilvering)

errors: No known data errors

29分待てば良いのかと思いきや、いま見ると3時間待ちなので、SMARTテストも同時実行する。

■ SMART shortテスト

# smartctl -t short /dev/sdb

smartctl 6.6 2017-11-05 r4594 [x86_64-linux-4.18.0-2-amd64] (local build)

Copyright (C) 2002-17, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF OFFLINE IMMEDIATE AND SELF-TEST SECTION ===

Sending command: "Execute SMART Short self-test routine immediately in off-line mode".

Drive command "Execute SMART Short self-test routine immediately in off-line mode" successful.

Testing has begun.

Please wait 1 minutes for test to complete.

Test will complete after Mon Nov 12 22:47:49 2018

Use smartctl -X to abort test.

# smartctl -l error /dev/sdb

smartctl 6.6 2017-11-05 r4594 [x86_64-linux-4.18.0-2-amd64] (local build)

Copyright (C) 2002-17, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF READ SMART DATA SECTION ===

SMART Error Log Version: 1

No Errors Logged

# smartctl -l selftest /dev/sdb

smartctl 6.6 2017-11-05 r4594 [x86_64-linux-4.18.0-2-amd64] (local build)

Copyright (C) 2002-17, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF READ SMART DATA SECTION ===

SMART Self-test log structure revision number 1

Num Test_Description Status Remaining LifeTime(hours) LBA_of_first_error

# 1 Short offline Completed without error 00% 42719 -

# 2 Short offline Completed without error 00% 32323 -

# 3 Extended offline Aborted by host 90% 32323 -

# 4 Short offline Completed without error 00% 25958 -

# 5 Short offline Completed without error 00% 25958 -

とりあえず、shortテストはOK

■ SMART offlineテスト

# smartctl -t offline /dev/sdb

smartctl 6.6 2017-11-05 r4594 [x86_64-linux-4.18.0-2-amd64] (local build)

Copyright (C) 2002-17, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF OFFLINE IMMEDIATE AND SELF-TEST SECTION ===

Sending command: "Execute SMART off-line routine immediately in off-line mode".

Drive command "Execute SMART off-line routine immediately in off-line mode" successful.

Testing has begun.

Please wait 23082 seconds for test to complete.

Test will complete after Tue Nov 13 05:12:42 2018

Use smartctl -X to abort test.

朝までかかるので、果報は寝て待つ

2018-11-12(Mon) [長年日記] この日を編集

■ [ZFS][HDD] HDDやはり壊れた

ZFSからエラーのメールを頂いていたのを、昨日の日記で書いたけど、今日はSMARTからもメールが来た。

# smartctl -l error /dev/sdb

smartctl 6.6 2017-11-05 r4594 [x86_64-linux-4.18.0-2-amd64] (local build)

Copyright (C) 2002-17, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF READ SMART DATA SECTION ===

SMART Error Log Version: 1

ATA Error Count: 2

CR = Command Register [HEX]

FR = Features Register [HEX]

SC = Sector Count Register [HEX]

SN = Sector Number Register [HEX]

CL = Cylinder Low Register [HEX]

CH = Cylinder High Register [HEX]

DH = Device/Head Register [HEX]

DC = Device Command Register [HEX]

ER = Error register [HEX]

ST = Status register [HEX]

Powered_Up_Time is measured from power on, and printed as

DDd+hh:mm:SS.sss where DD=days, hh=hours, mm=minutes,

SS=sec, and sss=millisec. It "wraps" after 49.710 days.

Error 2 occurred at disk power-on lifetime: 42730 hours (1780 days + 10 hours)

When the command that caused the error occurred, the device was active or idle.

After command completion occurred, registers were:

ER ST SC SN CL CH DH

-- -- -- -- -- -- --

40 51 d8 a0 a2 d4 0f Error: UNC 216 sectors at LBA = 0x0fd4a2a0 = 265593504

Commands leading to the command that caused the error were:

CR FR SC SN CL CH DH DC Powered_Up_Time Command/Feature_Name

-- -- -- -- -- -- -- -- ---------------- --------------------

25 00 00 78 a2 d4 e0 08 3d+14:16:29.677 READ DMA EXT

35 00 58 50 e7 79 e0 08 3d+14:16:29.677 WRITE DMA EXT

b0 d0 01 00 4f c2 00 08 3d+14:16:29.489 SMART READ DATA

35 00 90 e0 75 48 e0 08 3d+14:16:29.489 WRITE DMA EXT

ef 10 02 00 00 00 a0 08 3d+14:16:29.489 SET FEATURES [Enable SATA feature]

Error 1 occurred at disk power-on lifetime: 42722 hours (1780 days + 2 hours)

When the command that caused the error occurred, the device was active or idle.

After command completion occurred, registers were:

ER ST SC SN CL CH DH

-- -- -- -- -- -- --

84 51 ba 46 83 61 08 Error: ICRC, ABRT at LBA = 0x08618346 = 140608326

Commands leading to the command that caused the error were:

CR FR SC SN CL CH DH DC Powered_Up_Time Command/Feature_Name

-- -- -- -- -- -- -- -- ---------------- --------------------

61 00 80 00 83 61 40 08 3d+06:13:43.240 WRITE FPDMA QUEUED

61 00 50 00 82 61 40 08 3d+06:13:43.240 WRITE FPDMA QUEUED

61 00 78 00 81 61 40 08 3d+06:13:43.239 WRITE FPDMA QUEUED

61 a0 d8 10 d8 35 40 08 3d+06:13:43.239 WRITE FPDMA QUEUED

61 80 48 50 73 10 40 08 3d+06:13:43.239 WRITE FPDMA QUEUED

というとこなので、2013年12月の日記から、5年近く使ったHDDも交換だね。3TB→8TBにする。

2018-11-14(Wed) [長年日記] この日を編集

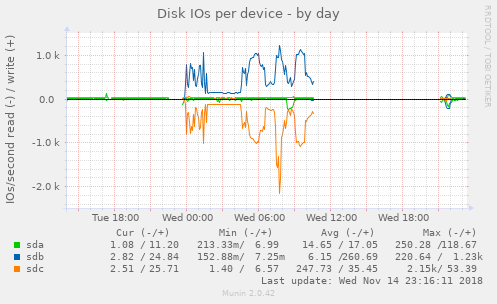

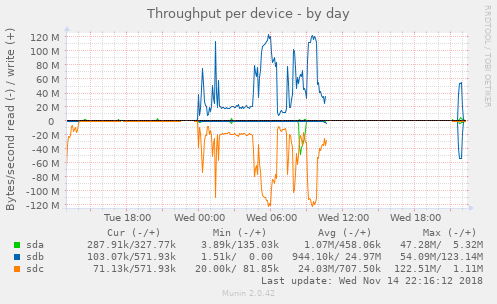

■ [ZFS][HDD] ディスク交換 3TB→8TB

先日の日記のようにHDDが壊れたようで8セクターが読めなくなったのでHDD交換をする。

従来は、3TBと4TBのzfs mirrorで、生き残った4TBを最大容量使っていないので、ミラーの生きている方を最大容量使え切るようにpartedで1MiB単位でzfs poolを最大化してからHDDを物理的に入れ替えた。

マルチユーザでブートしてから、gpartedでパテーションテーブル作ったりパティーションを未フォーマットで切った。

このへんは、昔と違ってサーバをマルチユーザで完全に起動した状態になってからGUIベースでできるので助かる。

で、zfsの玉のリプレース。リプレースするとミラーのリビルドも自動的に走る。

# zpool replace tank 2189429116797733421 ata-TOSHIBA_MD05ACA800_ZZRCK0MSZZZZ-part1

# zpool status -v

pool: tank

state: DEGRADED

status: One or more devices is currently being resilvered. The pool will

continue to function, possibly in a degraded state.

action: Wait for the resilver to complete.

scan: resilver in progress since Tue Nov 13 23:50:17 2018

19.2G scanned out of 1.79T at 16.1M/s, 32h10m to go

19.2G resilvered, 1.04% done

config:

NAME STATE READ WRITE CKSUM

tank DEGRADED 0 0 0

mirror-0 DEGRADED 0 0 0

ata-ST4000DM000-1F2168_Z307V2E2-part1 ONLINE 0 0 0

replacing-1 DEGRADED 0 0 0

2189429116797733421 UNAVAIL 0 0 0 was /dev/disk/by-id/ata-TOSHIBA_DT01ACA300_66SWYX9FS-part1

ata-TOSHIBA_MD05ACA800_ZZRCK0MSZZZZ-part1 ONLINE 0 0 0 (resilvering)

errors: No known data errors

ってわけで、ミラーのリビルドには一日かかる模様。

|

|