Onotaku Diary (おのたく日記) I've also started a YouTube channel →


2024-07-18(Thu)

■ [Kindle][Debian] Updating WineHQ

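In my August 1, 2022 entry, I installed the WineHQ packages on Debian because Kindle for PC didn't run well under the stock Wine. Recently, though, the t64 (64-bit time_t) transition broke the packages' dependencies, so I had uninstalled them.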

Now that t64 has settled down, I went to the "Debian WineHQ Repository" page to reinstall, and found that the repository key and where it is stored had changed slightly.

# wget -O /etc/apt/keyrings/winehq-archive.key https://dl.winehq.org/wine-builds/winehq.key

# wget -NP /etc/apt/sources.list.d/ https://dl.winehq.org/wine-builds/debian/dists/trixie/winehq-trixie.sources

# rm /usr/share/keyrings/winehq-archive.key

The last line deletes the old repository key file.

Then:

# apt update

# apt install --install-recommends winehq-stable

and with that, WineHQ installed successfully.
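As an optional check (not part of the WineHQ instructions), here is a minimal Python sketch that reads a deb822-style .sources file like the one downloaded above and reports any Signed-By keyring that doesn't exist, which would catch a file still pointing at the old /usr/share/keyrings key. The SAMPLE text is an assumption about what winehq-trixie.sources contains, so compare it with your downloaded copy; parse_deb822 and missing_keyrings are hypothetical helper names.

```python
from pathlib import Path

# Assumed contents of winehq-trixie.sources; check the real downloaded file.
SAMPLE = """\
Types: deb
URIs: https://dl.winehq.org/wine-builds/debian
Suites: trixie
Components: main
Signed-By: /etc/apt/keyrings/winehq-archive.key
"""


def parse_deb822(text: str) -> list[dict[str, str]]:
    """Split deb822 text (as used by *.sources files) into stanzas of fields."""
    stanzas: list[dict[str, str]] = []
    fields: dict[str, str] = {}
    last_key: str | None = None
    for line in text.splitlines():
        if line.startswith("#"):
            continue  # comment line
        if not line.strip():
            if fields:  # a blank line ends the current stanza
                stanzas.append(fields)
                fields, last_key = {}, None
            continue
        if line[0] in " \t" and last_key:
            fields[last_key] += " " + line.strip()  # continuation line, folded with a space
            continue
        key, _, value = line.partition(":")
        last_key = key.strip()
        fields[last_key] = value.strip()
    if fields:
        stanzas.append(fields)
    return stanzas


def missing_keyrings(stanzas: list[dict[str, str]]) -> list[str]:
    """Return Signed-By paths that are not regular files on this machine."""
    return [s["Signed-By"] for s in stanzas
            if "Signed-By" in s and not Path(s["Signed-By"]).is_file()]


if __name__ == "__main__":
    path = Path("/etc/apt/sources.list.d/winehq-trixie.sources")
    text = path.read_text() if path.is_file() else SAMPLE
    stanzas = parse_deb822(text)
    for s in stanzas:
        print(s.get("URIs"), s.get("Suites"), s.get("Signed-By"))
    print("missing keyrings:", missing_keyrings(stanzas))
```

This only handles Signed-By given as a file path; an inline key block in Signed-By would need the continuation lines kept with their line breaks.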

2024-07-07(Sun)

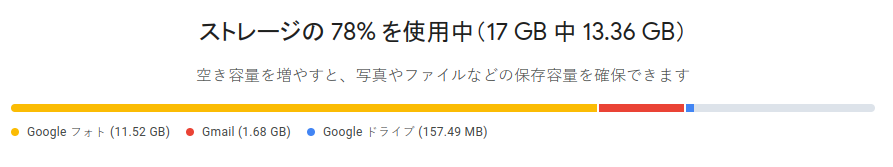

■ Exporting Google Photos came out about 60 times larger

I was going to tidy up my 11.52 GB of Google Photos, so I exported everything first as a backup, and the export came to 699 GB, about 60 times as much.

The photos were supposed to total only 11.52 GB...

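Oh right, they did announce that "starting June 1, 2021, storage is no longer unlimited, and free storage is capped at 15 GB." So the 11.52 GB only covers what I've uploaded since June 1, 2021, and the ten years before that account for more than 600 GB.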
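A quick check of the figures in this entry:

$$
\frac{699\ \text{GB}}{11.52\ \text{GB}} \approx 60.7, \qquad 699\ \text{GB} - 11.52\ \text{GB} \approx 687\ \text{GB}
$$

So the export is roughly 60 times the quota figure, and the photos from before June 2021 make up about 687 GB of it.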

2024-07-06(Sat)

■ UPS battery replacement

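As I wrote in a recent entry, the UPS battery was on its last legs, and finally the power cut out suddenly with the UPS alarm sounding. When I switched the UPS back on, power came back, but the status was NOBATTERY and the battery wasn't recognized.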

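I considered replacing the UPS with a successor model such as the BR400S-JP, but it costs nearly 17,000 yen, so as in January 2015, I decided to make do with a battery replacement again, nine years later.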

https://www.amazon.co.jp/dp/B06XJ24QWJ

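According to the manufacturer's page "Battery Replacement Procedure APC ES (BE550G-JP): Discontinued Product", support has ended, but RBC2J-S-compatible batteries for the APC ES500 (model BE500JP) were on sale at Amazon.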

https://www.amazon.co.jp/dp/B01M3OJV0J

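Remove the screw with a screwdriver and slide the cover off to expose the battery; I pulled it out by its tab and swapped in the new one. Years of use had made the old battery swell, so getting it out was hard. So that removal isn't a struggle next time, I stuck on a new pull tab.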

When I powered it back on, it started up without problems.

# apcaccess

APC : 001,034,0816

DATE : 2024-07-05 23:49:38 +0900

HOSTNAME : mirara

VERSION : 3.14.14 (31 May 2016) debian

UPSNAME : mirara

CABLE : USB Cable

DRIVER : USB UPS Driver

UPSMODE : Stand Alone

STARTTIME: 2024-07-05 23:43:52 +0900

MODEL : APC ES 500

STATUS : ONLINE

LINEV : 101.0 Volts

LOADPCT : 16.0 Percent

BCHARGE : 14.0 Percent

TIMELEFT : 6.2 Minutes

MBATTCHG : 5 Percent

MINTIMEL : 3 Minutes

MAXTIME : 60 Seconds

SENSE : High

LOTRANS : 90.0 Volts

HITRANS : 113.0 Volts

ALARMDEL : No alarm

BATTV : 13.4 Volts

LASTXFER : No transfers since turnon

NUMXFERS : 0

TONBATT : 0 Seconds

CUMONBATT: 0 Seconds

XOFFBATT : N/A

STATFLAG : 0x05000008

SERIALNO : 3B0829X86930

BATTDATE : 2015-01-17

NOMINV : 100 Volts

NOMBATTV : 12.0 Volts

FIRMWARE : 803.p6.A USB FW:p6

END APC : 2024-07-05 23:49:47 +090

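So the status is back to ONLINE and charging has started. The battery voltage, which had dropped into the 11 V range, is a healthy 13.4 V. However, the battery replacement date (BATTDATE) still says January 17, 2015, so I'll update it.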
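If you want to watch these values from a script rather than by eye, here is a minimal Python sketch that turns apcaccess output into a dict and checks the fields discussed above. The value of the online bit in STATFLAG (0x08) is an assumption taken from apcupsd's status flag definitions, so verify it against your apcupsd version; parse_apcaccess, number and is_online are hypothetical helper names.

```python
import re
import shutil
import subprocess

# apcupsd status flag for "on line power"; value assumed from apcupsd's source (UPS_online).
UPS_ONLINE = 0x00000008

# A few lines copied from the apcaccess output above.
SAMPLE = """\
STATUS   : ONLINE
BCHARGE  : 14.0 Percent
TIMELEFT : 6.2 Minutes
BATTV    : 13.4 Volts
STATFLAG : 0x05000008
BATTDATE : 2015-01-17
"""


def parse_apcaccess(text: str) -> dict[str, str]:
    """Turn apcaccess output ("KEY : value" per line) into a dict."""
    fields: dict[str, str] = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")  # split at the first colon only
        if sep:  # ignore lines without a colon
            fields[key.strip()] = value.strip()
    return fields


def number(value: str) -> float:
    """Return the leading number of a value such as '13.4 Volts'."""
    match = re.match(r"-?\d+(?:\.\d+)?", value)
    if match is None:
        raise ValueError(f"no number in {value!r}")
    return float(match.group())


def is_online(fields: dict[str, str]) -> bool:
    """True if STATUS lists ONLINE and the STATFLAG online bit is set."""
    flags = int(fields.get("STATFLAG", "0"), 16)
    return "ONLINE" in fields.get("STATUS", "").split() and bool(flags & UPS_ONLINE)


if __name__ == "__main__":
    # Query the running daemon if apcaccess is available, otherwise use the sample.
    text = SAMPLE
    if shutil.which("apcaccess"):
        result = subprocess.run(["apcaccess"], capture_output=True, text=True)
        if result.returncode == 0:
            text = result.stdout
    f = parse_apcaccess(text)
    print("online:", is_online(f), "BATTV:", f.get("BATTV"), "BATTDATE:", f.get("BATTDATE"))
```

Splitting at the first colon matters because values such as DATE contain colons themselves.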

# systemctl stop apcupsd.service

# apctest

2024-07-05 23:50:46 apctest 3.14.14 (31 May 2016) debian

Checking configuration ...

sharenet.type = Network & ShareUPS Disabled

cable.type = USB Cable

mode.type = USB UPS Driver

Setting up the port ...

Doing prep_device() ...

You are using a USB cable type, so I'm entering USB test mode

Hello, this is the apcupsd Cable Test program.

This part of apctest is for testing USB UPSes.

Getting UPS capabilities...SUCCESS

Please select the function you want to perform.

1) Test kill UPS power

2) Perform self-test

3) Read last self-test result

4) View/Change battery date

5) View manufacturing date

6) View/Change alarm behavior

7) View/Change sensitivity

8) View/Change low transfer voltage

9) View/Change high transfer voltage

10) Perform battery calibration

11) Test alarm

12) View/Change self-test interval

Q) Quit

Select function number: 4

Current battery date: 01/17/2015

Enter new battery date (MM/DD/YYYY), blank to quit: 07/05/2024

Writing new date...SUCCESS

Waiting for change to take effect...SUCCESS

Current battery date: 07/05/2024

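That updated the battery replacement date. While I was at it, I also changed the line-voltage transfer range. I had previously widened it to 90 to 113 V, but over the past year the line voltage stayed between 98 and 107 V, so I narrowed it to 93 to 110 V. I wanted the lower limit at 95 V, but it apparently can't be set higher than 93 V.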
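The two bits of arithmetic in this step can be sketched in Python: formatting a date in the MM/DD/YYYY form apctest prompts for, and checking how much headroom a low/high transfer pair leaves around the 98 to 107 V range observed over the past year. This is a hypothetical helper sketch; it doesn't talk to the UPS, and the values still have to be entered in apctest.

```python
from datetime import date


def apctest_date(d: date) -> str:
    """Format a date for apctest's "Enter new battery date (MM/DD/YYYY)" prompt."""
    return d.strftime("%m/%d/%Y")


def transfer_margins(lotrans: float, hitrans: float,
                     observed_min: float, observed_max: float) -> tuple[float, float]:
    """Headroom in volts between the observed line-voltage range and the transfer points.

    Positive values on both sides mean the observed swings stay inside the band
    and would not, by themselves, trigger a transfer to battery.
    """
    return observed_min - lotrans, hitrans - observed_max


if __name__ == "__main__":
    print(apctest_date(date(2024, 7, 5)))      # -> 07/05/2024
    print(transfer_margins(90, 113, 98, 107))  # old setting -> (8, 6)
    print(transfer_margins(93, 110, 98, 107))  # new setting -> (5, 3)
```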

2024-06-28(Fri)

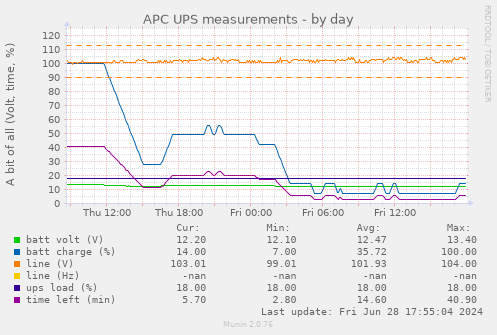

■ My UPS (uninterruptible power supply) battery is getting weak

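My APC ES500 (model BE500JP), bought on Amazon for 8,600 yen and put in service per my January 5, 2009 entry, suddenly started losing charge even though it's plugged in, and it's down to around 7%.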

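In January 2015 it kept beeping, so I replaced the battery, but it still beeped sometimes afterward. When I looked into it, the beeping wasn't about the battery at all but warned of supply-voltage fluctuations. I had just moved, never imagined the voltage in Japan could fluctuate that much, and so replaced the battery unnecessarily.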

This time it has been over two years since I moved, so that's unlikely, and the battery is nearly ten years old. Has it finally died?

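If I replace the unit, maybe an APC BR400S-JP? About 17,000 yen, huh. Batteries have gotten pricier too; is that why UPSes cost more now? Or is it because features and performance, such as sine-wave output, have improved?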

2024-05-19(Sun)

■ Fixing an Omnibus Docker image error when upgrading to GitLab 17.0

Summary:

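With the GitLab Omnibus Docker image, the upgrade failed with an error saying the constraint ci_pipelines_pkey on the ci_pipelines table could not be dropped. It could not be dropped because other objects depend on that constraint.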

gitlab-ce-1 | PG::DependentObjectsStillExist: ERROR: cannot drop constraint ci_pipelines_pkey on table ci_pipelines because other objects depend on it

gitlab-ce-1 | DETAIL: constraint fk_rails_a2141b1522 on table ci_builds depends on index ci_pipelines_pkey

gitlab-ce-1 | constraint fk_rails_a2141b1522 on table p_ci_builds depends on index ci_pipelines_pkey

gitlab-ce-1 | HINT: Use DROP ... CASCADE to drop the dependent objects too.

Solution:

I identified the dependent objects (foreign keys) and dropped them. Specifically, I took the following steps.

1. Connected to PostgreSQL inside the gitlab-ce container with docker compose exec gitlab-ce gitlab-psql.

2. Ran the following SQL to drop the dependent constraints.

ALTER TABLE ci_builds DROP CONSTRAINT fk_rails_a2141b1522;

ALTER TABLE p_ci_builds DROP CONSTRAINT fk_rails_a2141b1522;
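The two statements above follow mechanically from the DETAIL lines of the error. A minimal Python sketch (hypothetical helper, not a GitLab tool) that derives them from the log text; it only prints SQL and does not connect to the database, so review the output and back up first, as the lessons below recommend, before pasting it into gitlab-psql.

```python
import re

# Matches PostgreSQL DETAIL lines such as:
#   constraint fk_rails_a2141b1522 on table ci_builds depends on index ci_pipelines_pkey
DEPENDENCY = re.compile(
    r"constraint (?P<constraint>\w+) on table (?P<table>\w+) "
    r"depends on index (?P<index>\w+)"
)

# The DETAIL part of the error log from this entry.
LOG = """\
gitlab-ce-1 | DETAIL: constraint fk_rails_a2141b1522 on table ci_builds depends on index ci_pipelines_pkey
gitlab-ce-1 | constraint fk_rails_a2141b1522 on table p_ci_builds depends on index ci_pipelines_pkey
"""


def drop_statements(log: str, index: str) -> list[str]:
    """Build one ALTER TABLE ... DROP CONSTRAINT per constraint that depends on `index`."""
    statements: list[str] = []
    for m in DEPENDENCY.finditer(log):
        if m["index"] != index:
            continue  # dependency on some other index
        stmt = f"ALTER TABLE {m['table']} DROP CONSTRAINT {m['constraint']};"
        if stmt not in statements:  # skip duplicates if the log repeats a line
            statements.append(stmt)
    return statements


if __name__ == "__main__":
    for stmt in drop_statements(LOG, "ci_pipelines_pkey"):
        print(stmt)
```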

Result:

These steps resolved the error, and the GitLab Omnibus Docker image started normally.

Lessons learned:

This experience taught me the following:

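- When an error occurs with the GitLab Omnibus Docker image, the official documentation and forum threads often have the solution

- Always back up your data before making significant changes to the database

- When a problem occurs, stay calm, identify the cause, and then apply an appropriate fix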

To be honest, I asked Gemini 1.5 Pro for the fix and had it write this diary entry too, then had GPT-4 organize it.

2024-05-17(Fri)

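■ I dug out the PSP I played around with, custom firmware and all, more than ten years ago. But the small screen is tough on my presbyopic eyes! So I decided to use PPSSPP, which emulates a PSP on Linux.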

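I use Debian at home, but back in the PPSSPP 1.13 days only Ubuntu had a package, so I was running the Ubuntu package. Looking at the PPSSPP homepage for the first time in a while, it seems that running it from Flathub is now the main way.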

So I installed Debian's flatpak package, registered the Flathub repository, and installed PPSSPP.

flatpak remote-add -u --if-not-exists flathub https://dl.flathub.org/repo/flathub.flatpakrepo

flatpak install flathub org.ppsspp.PPSSPP

flatpak run org.ppsspp.PPSSPP

That launches PPSSPP!

Oh, it actually works! Now I can play PSP games on a big screen. Looks like a comfortable PSP life ahead!

To update it from now on:

flatpak update

Bonus

Here are a few commands I use now and then, for my own reference.

List flatpak repositories: flatpak remotes

List what's installed with flatpak: flatpak list
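For scripts that need to know whether PPSSPP is already installed, a minimal Python sketch built on flatpak list. It assumes flatpak list accepts --app and --columns=application, as recent flatpak versions do as far as I know; the ID filter is a heuristic in case a header line is printed, and the helper names are hypothetical.

```python
import shutil
import subprocess


def installed_app_ids(listing: str) -> set[str]:
    """Collect application IDs from `flatpak list --app --columns=application` output.

    Keeps only lines that look like reverse-DNS IDs (contain a dot, no spaces),
    so a header such as "Application ID" is skipped if one is printed.
    """
    return {
        line.strip()
        for line in listing.splitlines()
        if "." in line and " " not in line.strip()
    }


def flatpak_listing() -> str:
    """Run flatpak if it is available; return an empty listing otherwise."""
    if shutil.which("flatpak") is None:
        return ""
    result = subprocess.run(
        ["flatpak", "list", "--app", "--columns=application"],
        capture_output=True, text=True,
    )
    return result.stdout if result.returncode == 0 else ""


if __name__ == "__main__":
    app = "org.ppsspp.PPSSPP"
    state = "installed" if app in installed_app_ids(flatpak_listing()) else "not installed"
    print(app, state)
```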

2024-05-16(Thu)

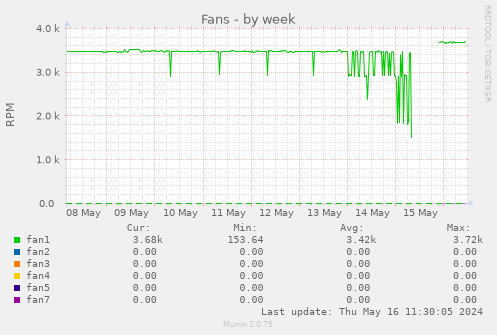

■ Replacing the motherboard after nine years: follow-up

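The other day I replaced my home server's motherboard for the first time in nine years. Afterward I realized I have no spare parts other than HDDs and SSDs, which makes me a bit uneasy. Having no spare power supply, BD-R drive, or motherboard feels precarious.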

Analysis of why the server stopped:

The logs show that the power supply was indeed failing. The case fan speed records show it had always run at maximum speed, but from about 24 hours before the failure the speed became unstable.

Unstable memory:

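I was using PC-2666 memory, but the motherboard limits it to PC-2400, and the modules didn't get along well with the board. In fact, with two modules totaling 32 GB I got memory errors, so I could only run with 16 GB.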

So I bought two DDR4 PC-2400 16 GB modules, matching the motherboard's specifications, on Amazon for under 10,000 yen and swapped them in.

Performance of the new motherboard:

As I wrote before, the CPU frequency went up 25%, from 2.4 GHz to 3.0 GHz, and BogoMIPS improved 1.25 times, from 3200 to 3993.

However, MIPS is a yardstick from the days of the 1-MIPS VAX-11, and it is said to be an unreliable basis for comparing performance from the 66-MIPS Pentium II onward.
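Worked out from the figures above, the two ratios are:

$$
\frac{3.0\ \text{GHz}}{2.4\ \text{GHz}} = 1.25, \qquad \frac{3993.6}{3200} = 1.248 \approx 1.25
$$

Both come out at about 1.25, which is why a second, workload-based measure such as the munin timings is useful.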

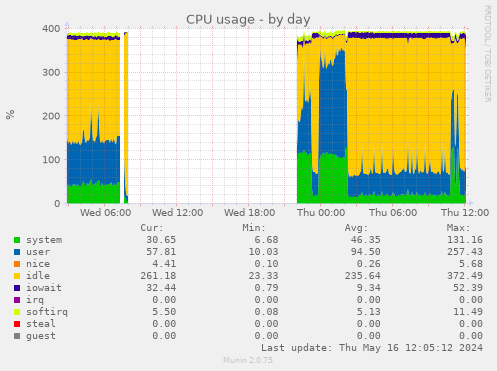

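Judging from the processing time of munin's data collection, it feels like roughly twice the performance. The workload hasn't changed, yet CPU utilization and similar metrics have dropped to about half.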

2024-05-15(Wed)

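■ While working today, I noticed an unusual sound: the ventilation fan seemed oddly loud. At first I thought dust was clogging the fan, but on reflection, I should normally have been hearing the HDDs of my home server.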

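Sure enough, the home server had stopped. The logs showed it went down around 7:15 in the morning and rebooted at 7:21, but nothing after that. When I turned it on, it shut off immediately. Opening the case to investigate, I found that with the HDDs and other devices disconnected from the motherboard, powering on barely got the PSU and case fans spinning, and it still didn't boot.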

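Suspecting the power supply unit, I decided to replace the Evergreen Power Glitter2 (EG-525PG2) 525 W PSU, which I had been using for more than 13 years since my October 28, 2010 entry, with a Kuroutoshikou KRPW-BR550W/85+ 550 W PSU certified 80 PLUS BRONZE. But it still didn't boot with the new PSU.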

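Next, I replaced the N3700-ITX motherboard from my August 19, 2015 entry with a J5040 board I bought at Dospara in May 2021. I had left that board unused because at the time I hadn't sorted out UEFI BIOS booting and struggled to get it to boot. This time it stopped while loading the initrd. It had bothered me before too: maybe the memory on the J5040 is the problem.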

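So I tried to run a memory test with the memtest86 CD-ROM and Super Grub2 CD-ROM I had burned in June 2021 for someday, but it wouldn't boot from CD-ROM. Come to think of it, my rarely used BD-R drive had been having trouble reading discs.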

So I replaced the PIONEER BD-RW BDR-207D 1.60 with a Hitachi-LG HL-DT-ST BD-RE BH16NS58 1.03. Both write BD-R at 16x, so no change there.

Booting from the Super Grub2 CD-ROM and running the memtest86 I had installed on the HDD earlier produced memory errors.

With one memory module removed, the test ran without errors for over an hour, and Debian booted as well.

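Was 32 GB itself the problem, or was the removed module faulty? To find out, I swapped the modules and tested again. It passed up to TEST2, so I concluded that putting 32 GB on a motherboard with an 8 GB maximum was the mistake. I couldn't wait an hour, so I stopped the memory test partway and tried to boot Debian.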

However, the BIOS check failed at times and the machine was flaky, and Debian wouldn't boot.

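In the end, I went back to the module that had been working, and it booted successfully. eth0 had turned into eno1, so I renamed it back to eth0 in /etc/udev/rules.d/70-persistent-net.rules. The pre-up for eth0 didn't run automatically; I plan to fix that later.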
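The actual rule isn't shown here, so as a hedged sketch: this Python helper builds a rule line in the common udev form that matches a NIC by its MAC address (ATTR{address}) and assigns NAME="eth0". The author's file may contain additional match keys, the helper name is hypothetical, and the fallback MAC is a placeholder.

```python
import re
from pathlib import Path

MAC = re.compile(r"^[0-9a-f]{2}(?::[0-9a-f]{2}){5}$")


def persistent_net_rule(mac: str, name: str = "eth0") -> str:
    """Return a udev rule line that gives the NIC with this MAC address a fixed name."""
    mac = mac.strip().lower()
    if not MAC.match(mac):
        raise ValueError(f"not a MAC address: {mac!r}")
    return (
        'SUBSYSTEM=="net", ACTION=="add", '
        f'ATTR{{address}}=="{mac}", NAME="{name}"'
    )


if __name__ == "__main__":
    # The kernel exposes each interface's MAC in sysfs; eno1 is the name seen after the swap.
    sysfs = Path("/sys/class/net/eno1/address")
    mac = sysfs.read_text() if sysfs.exists() else "00:11:22:33:44:55"  # hypothetical placeholder MAC
    print(persistent_net_rule(mac))
```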

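■ I only meant to replace the power supply, but I ended up replacing the motherboard and the BD-R drive too. It was a long day, but the server is running again, more or less. It still needs tuning, but it's a step forward.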

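So the "new" motherboard isn't a current model but a 2021 board that sat in its box for three years. Still, partly because boards with a low-power CPU and four HDD ports are no longer being made, this is my first motherboard replacement in nearly nine years.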

BogoMIPS went from 3200 to 3993.6, a 1.25x improvement.

I had prepared 32 GB of DDR4 SO-DIMM PC-2666, but only 16 GB worked, perhaps because the board tops out at PC-2400 or for some other reason I couldn't pin down, so the memory size didn't increase.

processor : 0

vendor_id : GenuineIntel

cpu family : 6

model : 122

model name : Intel(R) Pentium(R) Silver J5040 CPU @ 2.00GHz

stepping : 8

microcode : 0x24

cpu MHz : 2995.189

cache size : 4096 KB

physical id : 0

siblings : 4

core id : 0

cpu cores : 4

apicid : 0

initial apicid : 0

fpu : yes

fpu_exception : yes

cpuid level : 24

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc art arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf tsc_known_freq pni pclmulqdq dtes64 monitor ds_cpl vmx est tm2 ssse3 sdbg cx16 xtpr pdcm sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave rdrand lahf_lm 3dnowprefetch cpuid_fault cat_l2 cdp_l2 ssbd ibrs ibpb stibp ibrs_enhanced tpr_shadow flexpriority ept vpid ept_ad fsgsbase tsc_adjust smep erms mpx rdt_a rdseed smap clflushopt intel_pt sha_ni xsaveopt xsavec xgetbv1 xsaves dtherm ida arat pln pts vnmi umip rdpid md_clear arch_capabilities

vmx flags : vnmi preemption_timer posted_intr invvpid ept_x_only ept_ad ept_1gb flexpriority apicv tsc_offset vtpr mtf vapic ept vpid unrestricted_guest vapic_reg vid ple shadow_vmcs ept_mode_based_exec tsc_scaling

bugs : spectre_v1 spectre_v2 spec_store_bypass rfds

bogomips : 3993.60

clflush size : 64

cache_alignment : 64

address sizes : 39 bits physical, 48 bits virtual

power management:
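To pull individual fields such as bogomips or model name out of output like the above, a minimal Python sketch; cpuinfo_field is a hypothetical helper, and the embedded SAMPLE is a few lines copied from the output above so the sketch also runs where /proc/cpuinfo doesn't exist.

```python
from pathlib import Path

# A few lines from the /proc/cpuinfo output above.
SAMPLE = """\
processor\t: 0
model name\t: Intel(R) Pentium(R) Silver J5040 CPU @ 2.00GHz
cpu MHz\t\t: 2995.189
bogomips\t: 3993.60
"""


def cpuinfo_field(text: str, field: str) -> list[str]:
    """Return every value of `field` in /proc/cpuinfo-style text (one per logical CPU)."""
    values: list[str] = []
    for line in text.splitlines():
        key, sep, value = line.partition(":")  # keys are padded with tabs
        if sep and key.strip() == field:
            values.append(value.strip())
    return values


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    text = path.read_text() if path.exists() else SAMPLE
    bogomips = [float(v) for v in cpuinfo_field(text, "bogomips")]
    print(cpuinfo_field(text, "model name")[:1], bogomips)
```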

2024-04-14(Sun)

■ This diary had stopped working

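The tDiary Debian package moved from 5.2.3 to 5.3.0, and I was looking forward to seeing whether contrib had gained things like generative-AI proofreading. But I hadn't checked that it still worked, and it turned out the diary system wasn't running at all.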

I only found out because Google kindly emailed me, in effect saying "Hey, we can't index your site."

uninitialized constant Rackup

::Rackup::Handler::CGI.send_body(body)

^^^^^^^^ (NameError)

/usr/share/tdiary/index.rb:35:in `<top (required)>'

<internal:/usr/lib/ruby/vendor_ruby/rubygems/core_ext/kernel_require.rb>:86:in `require'

<internal:/usr/lib/ruby/vendor_ruby/rubygems/core_ext/kernel_require.rb>:86:in `require'

index.rb:7:in `<main>'

That was the error.

Apparently the new version uses Rackup where it used to use Rack, but ruby-rackup is still only in Sid, among other reasons, so it wasn't installed.

When I tried to install the ruby-rackup package, the dependencies couldn't be resolved, so I had no choice but to downgrade to tdiary 5.2.3.

2024-01-26(Fri)

■ I bought a two-key macro-programmable keyboard to unmute in Teams.

In Zoom you can unmute with the space bar, but in Teams meetings, because of patent issues, unmuting is Ctrl-Shift-M.

Clicking with the mouse or pressing Ctrl-Shift-M takes too long, so I bought a macro-programmable keyboard that looked like it could do it with a single key.

One key would be enough, but a single-key model costs no less, and there are many variants. Some cost only 300 yen but charge 1,500 yen for shipping, so I chose a 1,299-yen one with Amazon Prime.

I don't mind that the keycaps are labeled Copy and Paste.

■ How to register macros on the mini keyboard

The Amazon page gives the URL of a Windows macro-registration program, and the included manual has a QR code, but I use Linux, so I ran:

$ lsusb

...

Bus 001 Device 109: ID 1189:8890 Acer Communications & Multimedia

Searching for the ID 1189:8890 from that output, I found kriomant/ch57x-keyboard-tool on GitHub.

According to its README.md, mini keyboards of this type commonly use 1189:8890.
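The ID lookup can also be scripted. A minimal Python sketch, assuming lsusb's usual "Bus NNN Device NNN: ID vvvv:pppp description" line format; find_usb is a hypothetical helper and 1189:8890 is the ID from the output above. The bus and device numbers correspond to the device node under /dev/bus/usb, which is typically root-owned and likely why the upload step below is run with sudo.

```python
import re
import shutil
import subprocess

LSUSB_LINE = re.compile(
    r"^Bus (?P<bus>\d{3}) Device (?P<dev>\d{3}): "
    r"ID (?P<vid>[0-9a-f]{4}):(?P<pid>[0-9a-f]{4})(?: (?P<desc>.*))?$"
)


def find_usb(listing: str, vid: str, pid: str) -> list[tuple[str, str, str]]:
    """Return (bus, device, description) for every lsusb line with this vendor:product ID."""
    hits: list[tuple[str, str, str]] = []
    for line in listing.splitlines():
        m = LSUSB_LINE.match(line.strip())
        if m and m["vid"] == vid.lower() and m["pid"] == pid.lower():
            hits.append((m["bus"], m["dev"], m["desc"] or ""))
    return hits


if __name__ == "__main__":
    listing = ""
    if shutil.which("lsusb"):
        listing = subprocess.run(["lsusb"], capture_output=True, text=True).stdout
    for bus, dev, desc in find_usb(listing, "1189", "8890"):
        print(f"/dev/bus/usb/{bus}/{dev}: {desc}")
```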

Building and installing it requires rustup, so I installed the rustup Debian package first.

$ rustup default stable

$ cargo install ch57x-keyboard-tool

These commands build the tool and install it as ~/.cargo/bin/ch57x-keyboard-tool.

The MINI KeyBoard has only a single layer and two keys side by side, so I created a file like this:

orientation: normal
rows: 1
columns: 2
knobs: 0
layers:
  - buttons:
      - [ "<179>", "ctrl-shift-m" ] # any keys you like
    knobs:

Save the settings above in a file named your-config.yaml, then validate them and write them to the keyboard with the following commands.

$ ~/.cargo/bin/ch57x-keyboard-tool validate < your-config.yaml # validate

$ sudo ~/.cargo/bin/ch57x-keyboard-tool upload < your-config.yaml # write to the keyboard

Now unmuting takes a single keypress, which is handy.
